-

[coding] Notes on space complexityML engineer/Papers & CS generals 2023. 2. 6. 00:19반응형

🕓 4 mins read

# Notes on SW developer recruiting

Although we are not hiring at the moment, the last year was a year of heavy recruiting. I'm currently a senior ML engineer and I've been involved in quite a few technical(a.k.a. coding tests) interviews over the last 2 years. I was pretty surprise to see that many fresh grad candidates were well prepared for the most of the tree/graph traversal problems, but far less prepared for the complexity analysis.

It's true that modern computing systems come with heavy hardware support that it became quite common to find backend developers with ignorance to the space complexity of their products. (As long as the latency is good, they are happy with the result) However, when it comes to a large distributed systems where large numbers of programs running concurrently on each node, with heavy traffics, the seemingly huge VRAMs can run out in short period of time after deployment.

So as long as the technical interview goes, I want to point out for the future candidates(mostly fresh grads I mean,) the importance of correctly knowing what space complexity your program has and they should be aware of simple way of measuring and optimizing your program. Interviewers will be keen on turning down candidates with little or no knowledge of this aspect on developing softwares.

# What is Space Complexity?

Space complexity is a way of analyzing a program's performance, measuring ho much space(memory) it needs to run. As I've mentioned previously, modern computers do have abundant memory so the time complexity is more critical compared to the space complexity in most cases.

## Why Important?

Let's put aside how it's important to optimize programs' memory usage as it's pretty obvious. Then why do technical interviews ask for the interviewers to understand their code's space complexity?

With the growing tendency of candidates using python, javascripts, and other non-compiled languages with abundant libraries on the fly, we are seeing more applicants who are fond of heavily depending on library funcitons/modules instead of implementing all the details themselves. Yes, it's not something we should discourage, as it's not efficient to reinvent the wheel every time. However, without ever knowing the internals of these "wheels" excessive use can lead to abuses, affecting the programs performance badly.

Why the emphasis on the space complexity, but not the time complexity? Most, at least whom I've met so far, was keen on the time complexity as they are quite noticeable on the code itself, but the space complexity is not so observable and so they become prone to being unnoticed.

So I'd say it's good to use all the resource you can, but at the same time you should be aware of what really is happening under the hood. Any way, we only have some 20 minutes or so for each coding test problems, so these complexity analysis can be a good proxy of knowing in a short time if the candidates really understand what they've written.

# Measuring Space complexity? (Big-O)

I won't be discussing in detail how the asymptotic analysis works and the detailed definition of big O notations.

Let's just put the concept of big-O as a simple way of measuring the upper bounds.

a = 100In general, creating a variable is considered as 1 unit of space. One thing to note here is that if that variable's memory usage does not increase as the input data size increases, we consider it as a "constant" space usage.

Take a look at this simple factorial function.

def get_factorial(n: int) -> int: result = 1 if n < 3: return n for i in range(2, n+1): result *= i return resultIn the above code, even when the for loop iterates $n$ times, the variable `result` will always be a single integer, so the space complexity of this code is $O(1)$.

Then we say that this "constant" space usage as $O(1)$, that it will not grow proportional to the input data size.

However if we change the above code into the following, things become quite different.

def get_factorial(n: int) -> int: if n > 1: return n * get_factorial(n-1) else: return 1This is where many candidates fail to remember.

The code seems to have $O(1)$ space complexity, but when does this function finally return?

The recursive call starting from $n$ will expand until we reach get_factorial(1) where the recursion stops, returning 1. Then we will need $n$ stacked call to this function. So this function's space complexity will grow linear to the input size, so the big O is $O(n)$. If you didn't know, it'll be a perfect chance now to understand that each function call requires its own variable scope, which results in an additional memory usage.

# Reducing Space Complexity

Of course there is no single way to reduce space complexity for all algorithms as the space complexity is not decided soley on the size of the recursive function calls.

- How large an array can grow?

- How much dynamic allocation is expected?

However if we are considering the case of technical interviews, there are some basic rule of thumbs we can expect from the problems. A space usage of a program can be seen as the following two large category.

- Fixed space

- Variable space (dynamic)

When we ignore the time complexity, it's usually efficient to use data structures that use variable space, which would reduce average memory usage when we can cut down the usage most of the time.

Also when we create a function, all the local variables and the dynamically allocated objects all require memory space. So all the recursive call to such function will keep creating it's own scope of variables and objects, so when it's possible to implement the same logic into both iterative and recursive solution, iterative solution will be more efficient space complexity wise.

# Keep in mind

We've talked about the main roles a space complexity analysis plays in a technical interviews, and checked how we can measure and optimize space complexity.

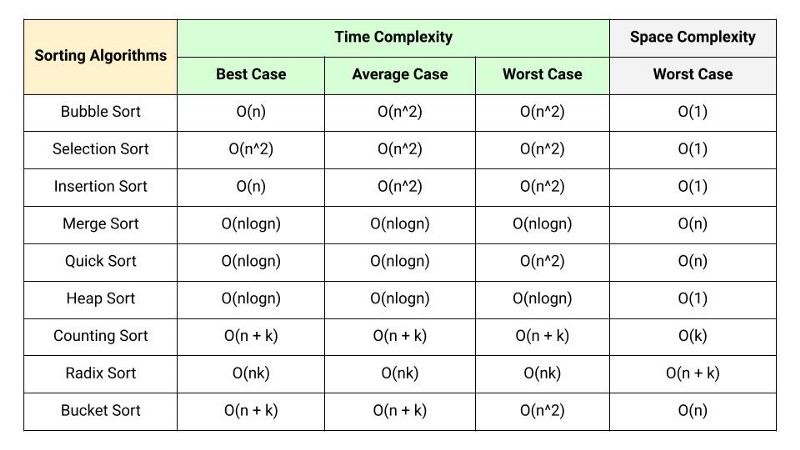

If you are a student preparing for a technical interview for the first time, I see many just memorizing the tables like below. If asked, they just shoot the best, average and the worst case complexity in 2 seconds. No that's not how technical interview works. NO ONE CARES about THE TABLE. Interviewers want to know if you really UNDERSTAND what code you have written, and filter out the false candidates who might have just got LUCKY to be asked a question that they've already memorized, solved before, who gives?

Even if you got lucky enough to fool the whole interview and got the job, it'll do you no justice in the long run. You'll most likely fail to get away with it once you've actually executed a real project.

https://www.enjoyalgorithms.com/blog/comparison-of-sorting-algorithms What's really important is to know the internals of the tools you are using. If you know the in and out of the data structures and the algorithms, then you'll have no problem with the space complexity analysis anyway.

반응형'ML engineer > Papers & CS generals' 카테고리의 다른 글